The first map new map was the "a-b terrain" map. This map splits the world into two vertical regions. The left side is densely populated with obstacles and the right is empty.

The first map new map was the "a-b terrain" map. This map splits the world into two vertical regions. The left side is densely populated with obstacles and the right is empty.

UCSC CMPS 146 Game AI

Spring 2006

Adam Smith (amsmith@cs.ucsc.edu)

source/binary package: tgrl.tgz

[Includes demos: tgrl-ab-grav, tgrl-trap-sprint and tgrl-stock-stock.]

This project explores using online learning techniques to learn a controller for the Tag Game automatically that decides actions based on experience under a variety of new game environments. In the stock version of the tag game, the included controllers are fairly effective using simple, hard-wired tactics. This project explores character control in environments where there are significant strategic or tactical opportunities that are not exploited but the included controllers. To allow for location-based strategy, two new non-uniform map types were added. Furthermore, to allow for smarter action choosing tactics, two new motion models were added as well. A new controller was created that learns to choose actions using online, reinforcement learning.

The sections following in this document describe the new environment the controller is expected to face, the design of the learning controller, implementation details, and experimental results.

The controllers included with the Tag Game are ignorant of their character's location in the world. Given that the stock maps are uniformly distributed (random) obstacles this approach is not bad. However, if maps have certain location-specific characteristics a controller should be able to exploit these to do better than the included controllers.

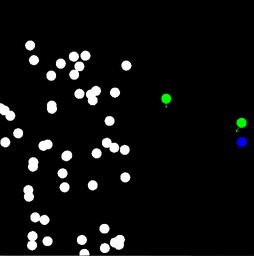

The first map new map was the "a-b terrain" map. This map splits the world into two vertical regions. The left side is densely populated with obstacles and the right is empty.

The first map new map was the "a-b terrain" map. This map splits the world into two vertical regions. The left side is densely populated with obstacles and the right is empty.

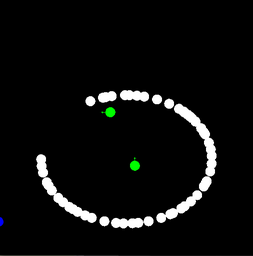

The second map new map was the "trap" map. In this map the world is free of randomly distributed obstacles but there is a clear C-shaped boundary enclosing the center region of the map.

The second map new map was the "trap" map. In this map the world is free of randomly distributed obstacles but there is a clear C-shaped boundary enclosing the center region of the map.

Another property of the included controllers is that they are all ignorant of absolute direction and mostly ignorant of range. Again, with the default motion model this approach is not surprising. This project introduces two new environments that could break assumptions in the included controllers.

To make absolute direction important gravity is added to the motion model. Under this model, characters (even the player character) can hardly manage more than hovering when they choose to move directly up, however motion to the sides is unaffected. A constant downward vector augments the character's acceleration to accomplish this. The max-velocity and max-force constraints in the simulator were unchanged so terminal velocity is reached after a short time. Horizontal arrangements of obstacles become more dangerous/useful in this model because they are much harder to avoid.

Adding the ability to sprint for short periods of time encourages awareness of range to targets. The characters use the sprint capability whenever their intended velocity is greater than half of its maximum value. In this way, the include controllers can use the sprinting ability. During sprinting, a character's maximum velocity and maximum force are increased by a large factor. Characters have a limited amount of sprint energy that refills with time. Using this ability in moderation would allow for the best maneuverability.

The new controller is primarily a state-based reactive controller. At fixed intervals the state of the world is sampled, quantized, and used to decide the movement action to be used for the next interval. Initially, the controller has no knowledge of the environment or its dynamics. This is desirable because this controller will be expected to adapt to several environments with their own dynamics. Sparse feedback is given when the controller's character becomes tagged or tags another character. In the many intervals between these feedback events the controller updates its knowledge by observing world state transitions.

For the purposes of the new controller, the Tag Game is modeled as a Markov Decision Process with a discrete domain for both the state and action space. Under this representation time is quantized into discrete steps as well.

To represent the state of the world for tactical purposes the following are sampled.

- tagged? (Yes/No) - nearest/tagged character heading (N/S/E/W) - nearest/tagged character range (Short/Long) - own heading (N/S/E/W) - own velocity (Low/High) - strategic map gradient direction (N/S/E/W) - strategic map local value (Low/High)

The possible actions that can be chosen by the new controller are the following.

- remain still - head north at full speed - head south at full speed - head east at full speed - head west at full speed

The tactical knowledge of the controller is represented as a value function, Q(s,a), the value of choosing action a when in state s. This function is stored as a large table that is initially filled with zeroed values. This value function is the focus of the learning as well as the control policy. The controller does possess strategic knowledge, however only the information on how to interpret its local value is encoded in the tactical knowledge representation.

The strategic knowledge of the world is encoded in two grid-based maps. These maps store how many characters have been tagged or were free at fixed intervals near the center of each grid location in the recent history of the game. Tagging or observed-evasion events increase the value stored for each grid location in their respective maps with a falloff that is a function of that grid location's distance from the event's location. Periodically, the maps are multiplied by a decay-constant to allow more recent observations to outweigh older observations. At action decision time, only the gradient and value of one of the map grid location's closest to the character's current position is sampled.

The new controller decides its actions following an epsilon-greedy policy. That is, most of the time the controller chooses the most valuable action for the given state using its current value function. However, with probability epsilon (small) it takes a random, exploratory action. This occasional exploration of a different action choice is crucial to the learning process.

While this policy is quite simple, it maps to a somewhat complex result in the actual game world. The actual velocity of the character is really a function of their actions over a noticeable time window, not just the last decision, because characters have acceleration limits.

The controller updates its knowledge using Q-learning. Q-learning is an off-policy TD(0) control algorithm (explained here). This algorithm provides an update rule for updating the value function using observed state transitions and reward values.

With respect to the Tag Game, the relatively complex physical motion model updates the controllers world state. Rewards are assigned after tagging events occur. A value of +1 is assigned when controller successfully gets it's character to tag another, and a value of -1 is used when the controller's character becomes tagged. No reward is provided other than during these events so that the controller can will not be able to find a way to maximize its expected reward without directly addressing the rules of the game.

For many of the changes to the Tag Game code, #ifdef statements guard the added code. The symbols used all begin with TGRL_ and are evident at the top of the affected files after the license notice. This pattern was chosen so that less logic would have to be added to existing code to pass along selection parameters for the new program behaviour.

The new maps were added to the Tag Game code by modifying the setupObstacles function inside of tagGame.cxx. A #define for TGRL_MAP for the values 0, 1, or 2 selects between the different obstacle placing code blocks.

The gravity effect was implemented by adding code to the processActions method inside of tgSimulator.cxx. A single parameter, TGRL_GRAVITY_BALANCE controls the degree of the gravity effect.

The sprinting ability required modification of several source files. A sprintEnergy field was added to all characters in tgCharacter.h. The initial value for this field was added in tgCharacter.cxx. Finally, the logic to use and update this field were added to the processActions method of tgSimulator.cxx. Two parameters, TGRL_MAX_ENERGY and TGRL_SPRINT_RATE control the degree of effect for these changes.

The learning controller code was organized into a new class that derives from the base tgController class. This controller provides an implementation for the all-important calcAction method, as well as provides additional functionality for file input and output for all of the knowledge representation. The class definition of the controller echoes the design described above but several problems arose before all of its logic was implemented.

In the current version of the code, the state space used by the controller is much simplified relative to the design. The controller is aware of only sixteen states. These are the cross product of the tagged-ness of the character and the quantized heading to the nearest character or tagged character depending on the first variable. In this model the strategic information is not used. As such, there is no code to update it.

The controller's behavior is parameterized by several values. The Q-learning algorithm depends primarily two parameters, alpha, a learning step size, and gamma, a discount rate. These parameters are currently tuned so that the controller will take obvious reactive measures when first tagged, despite these actions not always being useful. The exploratory action probability, epsilon, is currently set to a relatively high value (10%) so that the controller will quickly gain experience with all possible actions it can take. This, however, has a detrimental effect on learning because it weakens the connection between the value function and the choice of action. Rewards are occasionally misplaced because an action other than the expected one was taken. The finaly parameter is the time quantization value or thinkInterval as it is used in the code. This value, currently a quarter-second, determines how often knowledge update an action decision take place. Having a larger time interval decreases the effect of action averaging as discussed in the control section of the controller's design, but limits the rate of learning.

The resulting Tag Game project shows that there are, indeed, situations where the included controllers hard-wired decision-making process breaks down, however the new controller, as implemented, is not able to learn even to compete at the level of the included controllers. Several bugs remain to be discovered in the learning code and it is unclear how long it should be expected to take for the learning controller to converge to a competitive control policy. Furthermore, much of the large space of parameter values remains to be explored. It would have been interested to try initializing the value function for the learning controller with values that would produce an approximation to the other controller's tactics and seeing where they evolved from there. This, however, would have involved significant work that was more along the lines of offline learning to figure out how to encode those tactics in the model of the MDP assumed by the learning controller. With respect to the new maps and motion models, it would be possible to make environments that were even less favorable to the included controllers but favored an easily learnable new tactic, however these would hardly be considered variations of the Tag Game any more.